Intelligent Health – powered by advances in computing power, Artificial Intelligence (AI) and a rapidly expanding corpus of patient data – holds enormous potential to improve health care systems and patient health in Europe. The Commission’s Communication on enabling the digital transformation of health and care in the Digital Single Market calls out the profound challenges that Europe’s healthcare systems are facing and the need for new technologies and approaches that better leverage data. By enabling the smart, efficient and safe use of patient data, AI-infused technologies are already transforming many aspects of contemporary healthcare across Europe, for the benefit of patients and the broader public.

There are gaps between where we are now and a future healthcare system that effectively leverages data and AI:

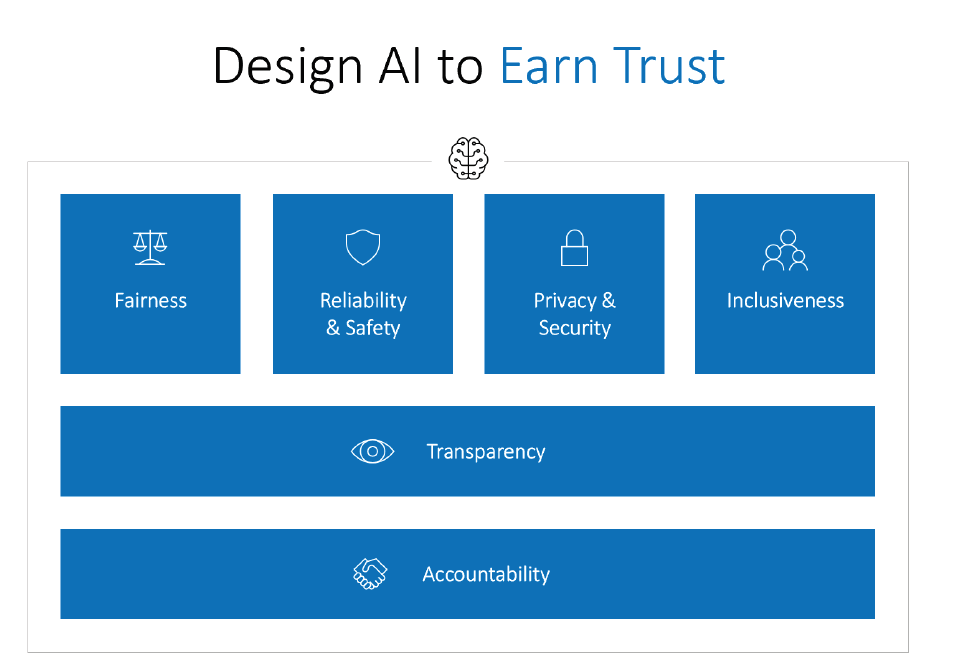

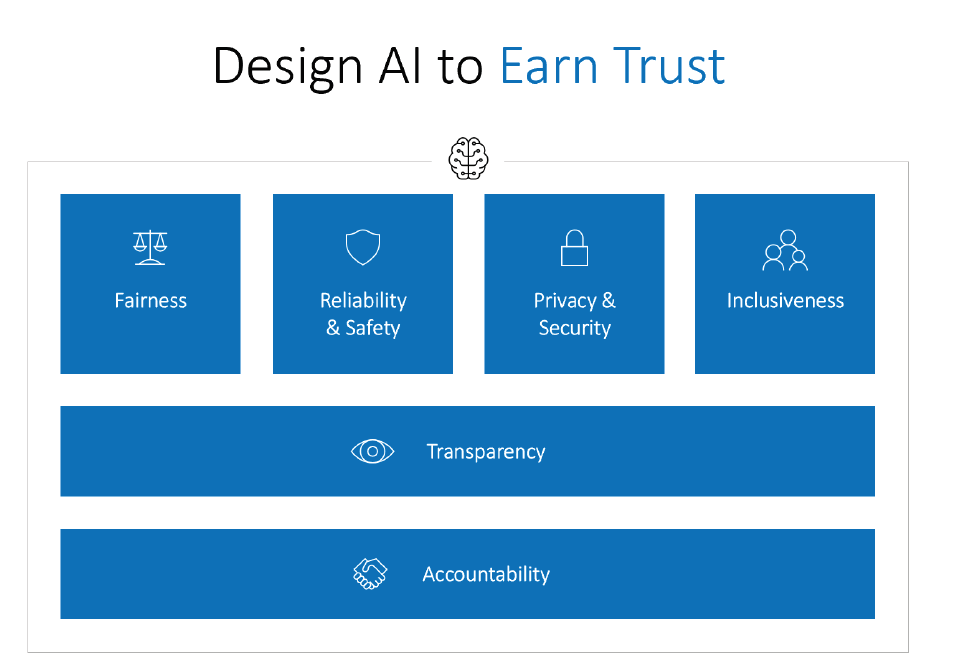

- Organizational and technical barriers to data sharing and data use;

- Insufficient public trust and lack of a regulatory framework that promotes more access to and use of patient data for research purposes, while addressing privacy and security concerns; and

- A lack of clear rules, or even a tentative discussion framework, governing the ethical and social implications of patient data, AI and its growing use in the field of healthcare.

Technology is never neutral

These data silo challenges are only becoming more acute as patients themselves begin to amass their own repositories of health data from an array of new wellness and healthcare devices landing in the market. We are confident that a mix of market-driven technical standards and incentives that reward provider stakeholders for sharing and aggregating data could go a long way toward reducing current barriers to data sharing and use in the healthcare context.

While we must make sure that patient data is stored securely, we cannot let privacy or security be barriers to better use of an individual’s data for his or her own diagnosis and care. We need to better consider the genuine altruism among patients and enable research uses of patient data that deliver broad societal benefits. We must do more to show the value of data sharing for research, showing citizens how their data is already being used for great benefit.

The GDPR provides a timely opportunity and frame for these secondary use discussions, offering Member States discretion to set flexible rules for research uses of patient data. But equally importantly, we must ensure that regulatory frameworks governing the use of patient data enhance trust with citizens. To that end, it will be important not only to discuss how and under what circumstances patient data can be used, but also to outline certain prohibited uses of patient data and identify methods that give patients enhanced controls. To do this, we will have to move beyond a narrow transactional view of data protection and delve into a wider ethical discussion about sharing, aggregating and extracting insight from sensitive patient health information.

While AI-infused technologies are already delivering benefits to patients around the world, these early applications are only the leading edge of a potentially much larger wave of healthcare AI technologies. With the advent of these solutions, the question becomes: how do we respond to the ethical and legal challenges they will undoubtedly create? Society will obtain the public health benefits of AI-infused technologies only if these systems are developed and deployed responsibly.

Although AI is already delivering clear benefits to the healthcare sector, we have critical work to do to ensure that AI-infused healthcare technologies continue to be developed and deployed responsibly.

To protect people and maintain trust in technology, the development of AI should be rooted in a commitment to six key principles: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. For these principles to be effective, however, they must be integrated into ongoing operations.

Summary of Policy Recommendations

To address organizational and technical barriers to data sharing and data use:

- Promote the use of open standards to better enable technical interoperability and explore opportunities to create greater incentives for data sharing across organizations.

- Enable new technical solutions such as blockchain to improve data provenance, health information exchange and collaboration.

- Continue EU funding for digital health solutions that enable health information exchange and data provenance, including for patient-reported outcome measures (PROMs).

- Analyze the implementation of research provisions under the GDPR in Member States, and where needed, amend laws or create more clarity through interpretations and guidance, to ensure innovative research projects don’t die on the vine.

- Demonstrate the value of a ‘data commons’ and build confidence in all stakeholders through visibility of success stories where data sharing and technological innovation have improved health outcomes.

- Explore and promote new models for data donation that encourage patients to more easily enable their data to be used for beneficial research purposes.

- Invest in technical solutions, including through research funding, to enable secure machine learning with multiple data sources/systems.

- Support commonly used global standards for the controls in national certification schemes for handling patient health information, and promote GDPR-harmonized, EU-wide certification and accreditation schemes.

- Utilize emerging frameworks that will help ensure AI technologies are safe and reliable, promote fairness and inclusion and avoid bias, protect privacy and security, provide transparency and enable accountability.

- Invest in more research to explore and enhance methods that enable intelligibility of AI systems.

- Advance a common framework for documenting and explaining key characteristics of datasets.